The best kittens, technology, and video games blog in the world.

Tuesday, August 29, 2006

Slides for my master's thesis

The thesis is: RenderMan Compiler for the RPU Ray Tracing Architecture.

Here are the slides in PDF and SXI format.

Now, in the process I discovered how cool OpenOffice.org Impress is. Before making the slides I only vaguely remembered how totally lame PowerPoint was, especially its math typography, which looks like it was done by a 4-year-old kid, and I considered writing HTML+CSS for Opera the best way of making slides. What changed my mind was that Opera doesn't seem to be able to export the slides to any other format (like PDF), and the slides look horrible in every other browser. Why isn't Firefox compatible here ? It's using almost only official CSS. Well, I'll rant about how Firefox sucks some other time :-)

So OpenOffice.org Impress is somewhat less lame than PowerPoint. The good thing is that making nice Web 2.0-looking flowcharts (with gradients) is actually easier than with Opera. The huge bad thing is that there are no predefined templates, and the ones I could find online were rather weak, like not having a special title slide format, and using a moral equivalent of Comic Sans for slide numbers.

Oh yeah, end of the rant. Just look at the slides and enjoy :-)

Friday, August 25, 2006

All your Pluto are belong to us

The Getting Things Done methodology is a great thing, especially the "Am I the right person to do it ?" part. What's most amazing is how many things get done on their own if you follow it. One such thing happened yesterday. Since like forever I've had on my TODO list writing something on the Polish Wikipedia about the controversy over Pluto's status as a planet. I mean - we can all see it's totally lame and it shouldn't be one.


So let's do some GTD on it:

- Would it take less than 2 minutes ? Well, not really - documenting the controversy would take at least an hour or two.

- Am I the right person to do it ? Oh yeah, why should I be doing it ? Wouldn't it be better to make the IAU declare Pluto not-a-planet instead, so I don't have to do any work ?

Now, let's delegate the "Free elections in Belarus" item ...

Sunday, August 20, 2006

Programming in Blub

Languages vary in power. Like Paul Graham, let's take a language of intermediate power and call it Blub. Now someone who only speaks Blub can look at less powerful languages and see that they're less powerful. But if they look at more powerful languages, they won't think of them as more powerful - such languages are going to look simply weird, as it is often very hard to see the power without actually being fluent in them. Even if you are shown a few examples where some language beats your favourite Blub, you're more likely to think something along the lines of "who would actually need such a feature" or "well, it's just some syntactic sugar", not "omg, I've been coding Blub all my life, I have been so damn wrong". I'm not going to criticize such thinking; in many cases weird is just weird, not really more powerful.

Oh by the way, while Paul Graham noticed this paradox, he is also its victim - thinking that his favourite language (Common Lisp) is the most powerful language ever, so when it doesn't have some feature (object-oriented programming), then by definition such feature is "just weird", and not really powerful. Of course we all know better ;-)

Now when you really get those features of a more powerful language, you can sometimes program better even in Blub.

Why am I talking about that ? Because we can move one step forward and consider Ruby our new Blub. It is pretty much the most powerful general purpose language right now, but it still doesn't have a few nice features natively :-) Fortunately we can try to understand and emulate them.

Multiple dispatch - Think about addition. Addition can easily be a method (like in Ruby or Smalltalk). You send one object a message (+, other_object). So far so good. Now how about adding a Complex class ? Complex's method + must know how to add Complex numbers and normal Float numbers. But how can we tell Float what to do about Complex arguments ? Float#+ is already taken and doesn't have a clue about Complex numbers. We need to do some ugly hacks like redefining +. Or a more complex example - pattern matching. Regexp matches String. We want to implement a pattern Parser that matches String, and make Regexp match IOStream. It's ugly without multiple dispatch.
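For the arithmetic half of this problem, Ruby's numeric classes already ship a limited workaround: when `Float#+` or `Integer#+` receives an object it doesn't recognise, it calls `object.coerce(self)` and retries the operation on the two-element array that comes back. A minimal sketch with a hypothetical `Cplx` class (named so it doesn't clash with the built-in `Complex`):

```ruby
# Hypothetical minimal complex-number class (not Ruby's built-in Complex)
class Cplx
  attr_reader :re, :im

  def initialize(re, im = 0)
    @re, @im = re, im
  end

  # Cplx + Cplx, and Cplx + any plain number
  def +(other)
    other = Cplx.new(other) unless other.is_a?(Cplx)
    Cplx.new(re + other.re, im + other.im)
  end

  # Float#+ and Integer#+ call this when handed a Cplx: return the pair
  # [left, right] on which Ruby should retry the operation
  def coerce(number)
    [Cplx.new(number), self]
  end

  def to_s
    "#{re}+#{im}i"
  end
end

puts Cplx.new(1, 2) + 3     # prints 4+2i  (Cplx#+ handles the number itself)
puts 1.5 + Cplx.new(1, 2)   # prints 2.5+2i (Float#+ asks Cplx#coerce for help)
```

This is double dispatch hand-rolled for one protocol only; it does nothing for the Regexp/Parser/IOStream case, which is where a general multiple dispatch mechanism pays off.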

Of course Ruby is not a Blub and we can easily make defgeneric ;-)


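```ruby
# Define (or extend) method `name` on class0, dispatching on the classes of
# the remaining arguments. If they don't match, fall back to the previous
# definition of the method, if there was one.
def defgeneric(name, class0, *classes, &block)
  old_method = begin class0.instance_method(name) rescue nil end

  class0.send(:define_method, name) {|*args|
    if args.size == classes.size and classes.zip(args).all?{|c,a| a.is_a? c}
      block.call(self, *args)
    elsif old_method
      old_method.bind(self).call(*args)
    else
      method_missing(name, *args)
    end
  }
end

# Assumes Complex is a simple user-defined class with re/im readers and
# Complex.new(re, im = 0), not Ruby's built-in Complex
defgeneric(:+, Complex, Object) {|a,b|
  a + Complex.new(b)
}

defgeneric(:+, Complex, Complex) {|a,b|
  Complex.new(a.re + b.re, a.im + b.im)
}

defgeneric(:+, Float, Complex) {|a,b|
  Complex.new(a) + b
}
```

```ruby
# Ruby 1.8 spelled this ["a", "b", "c"].enum_with_index.map{...}
["a", "b", "c"].each_with_index.map{|a,i| "#{i}: #{a}"} # ["0: a", "1: b", "2: c"]
```

```ocaml
let rec sum = function
  | [] -> 0
  | hd::tl -> hd + (sum tl)
```

```ruby
def sum(x)
  case x
  when []
    0
  when [_{hd}, *_{tl}]
    hd + sum(tl)
  end
end
```

```ruby
if xml_node =~ xmlp(_{name}, {:color=>_{c}}, /Hello, (\S+)/)
  print "A #{c}-colored #{name} greets #{$1} !"
end
```

Thursday, August 17, 2006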

magic/xml tutorial

I wrote a magic/xml tutorial. It documents how to use magic/xml to load, build, and query XML documents. I tried to limit it to the most important things.


Enjoy the tutorial, and if you have any questions, just ask :-)

Wednesday, August 16, 2006

Movie reviews

Movies I watched recently, more or less in order of enjoyability, all from IMDB Top 100:


- Blade Runner (1982, 8.3, #99) - This movie is absolutely great. In fact much better than the book. Unfortunately there is no way I can tell you what's so great about it without spoiling it - just be sure to watch the director's cut (hint hint).

- Singin’ in the Rain (1952, 8.4, #68) - Normally I have low tolerance for musicals, but this one is wonderful. The characters of Don Lockwood, Lina Lamont, and Kathy Selden are just great compared to what is typically served in romantic comedies. If you want to see just one musical in your life - check out this one.

- To Kill a Mockingbird (1962, 8.5, #41) - A movie that at the same time exists in a highly unreal world filled with magic and offers serious social commentary on the 1930s American South. Like Dogville, except much less dark.

- The Maltese Falcon (1941, 8.4, #57) - Noir classic. Multilayer conspiracies, murders, ruthlessness, big money, and evil women all over the place.

- The Sting (1973, 8.3, #81) - a classic of the "con artist" genre that we all love so much. As usual, there are conspiracies within conspiracies, and nothing is quite the way it seems. Definitely a fun watch.

- The Treasure of the Sierra Madre (1948, 8.5, #52) - A bunch of guys go search for gold in the Sierra Madre. But sitting on bags of gold worth more than they ever earned in their lives can easily lead to paranoia ...

- Mr. Smith Goes to Washington (1939, 8.3, #87) - yay, a very early movie about corruption in national politics. Some naive guy gets to become a replacement senator, when the regular one dies during the term. Then when he tries to actually be a senator and not just a puppet, he gets confronted with the power of some rich guy who controls all politicians in the whole state. Pretty funny.

- On the Waterfront (1954, 8.3, #84) - the workers and a priest struggle against exploitation by mob-controlled labour unions. Mildly noir, somewhat enjoyable. Oh yeah, and the director was an asshole.

- Modern Times (1936, 8.5, #66) - some Charlie Chaplin stuff. Watchable, but I wasn't terribly impressed. The Great Dictator was somehow more enjoyable.

- School Rumble - Hypergenki high school comedy. Harima loves Tenma, Tenma loves Karasuma (the whole network of unreturned love interests gets even more complex later), everybody is totally weird and doing absurd things all the time. Plenty of fun to watch.

- High School Girls - Ecchi high school comedy. It proves that there is such a thing as too many panty-shots. Totally awesome.

- Magical Girl Lyrical Nanoha - Totally ubercute (and they did actually say "mahou shoujo" in the title, congratulations). One feels there wasn't enough plot for the whole season, and the characters are rather bland (except for Fate, who is actually great), and ubercuteness may not be enough to save the series.

Thursday, August 10, 2006

magic/xml beats XQuery at W3C's XML Query Use Cases

It is now official - magic/xml is the best XML library ever ;-). magic/xml solutions to the W3C XML Query Use Cases problem set are on average a whole 1% smaller than XQuery solutions, and it probably does far better than that on more neutral benchmarks.


The Use Cases were really cool. Having a set of "real" (well, real enough) third-party problems made it possible to make magic/xml even more expressive. If I had relied only on my own problems, they would probably contain my biases about what's the "right" way to process XML. Full sources can be viewed at magic/xml's website, including a pretty-printed comparison of all solutions (pretty printing by CodeRay). Here's just a short summary of cool new features.

XML.load(source) - stolen from CDuce's load_xml. source can be a file name, a URL or a file handle. Oh yeah, and it supports "real" HTTPS now.

Pseudoattributes - XML has real attributes, but some people insist on using dummy elements instead. So you see a lot of <foo><bar>Hello</bar></foo>. magic/xml lets you pretend these are "real" attributes. You can read and even write to them, and it will do the right thing: node[:@bar] += ", world!".
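The idea can be sketched on a hypothetical minimal `Node` class (not magic/xml's actual implementation): a key that starts with `@` is redirected to the text of the first child element with that name, and writing to a missing one creates that child element.

```ruby
# Hypothetical minimal element class, used only to illustrate pseudoattributes
class Node
  attr_reader :name, :attrs, :contents

  def initialize(name, attrs = {}, *contents)
    @name, @attrs, @contents = name, attrs, contents
  end

  # Text content with markup stripped
  def text
    contents.map { |c| c.is_a?(Node) ? c.text : c.to_s }.join
  end

  # node[:href] reads a real attribute; node[:@bar] reads the text of the
  # first <bar> child element (a pseudoattribute)
  def [](key)
    tag = pseudo_tag(key)
    return attrs[key] if tag.nil?
    child = child_named(tag)
    child && child.text
  end

  # Writing a pseudoattribute replaces the child's contents, or adds a new child
  def []=(key, value)
    tag = pseudo_tag(key)
    if tag.nil?
      attrs[key] = value
    elsif (child = child_named(tag))
      child.contents.replace([value])
    else
      contents << Node.new(tag, {}, value)
    end
  end

  private

  # :@bar -> :bar, any other key -> nil
  def pseudo_tag(key)
    s = key.to_s
    s.start_with?("@") ? s[1..-1].to_sym : nil
  end

  def child_named(tag)
    contents.find { |c| c.is_a?(Node) && c.name == tag }
  end
end

foo = Node.new(:foo, {}, Node.new(:bar, {}, "Hello"))
foo[:@bar] += ", world!"   # reads <bar>'s text, appends, writes it back
puts foo[:@bar]
```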

Multielement children/descendant paths - node.children(:p, :*, :ul, :li, :*, :a) can find links inside list items of unordered lists inside paragraphs, basically like XPath /p//ul/li//a. Now I could have implemented XPath, but we're already beating XQuery anyway, and I have a vague feeling that by letting arbitrary objects be path elements we can do some really cool things using === and =~.
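A rough sketch of how such a path could be followed, on a hypothetical tree of `El` structs (not magic/xml's code): a tag name selects matching children, and `:*` matches zero or more intermediate levels, which is what makes `:p, :*, :ul` behave like XPath's `p//ul`.

```ruby
# Hypothetical minimal element: a tag name and a list of child elements
El = Struct.new(:name, :children)

# Follow `steps` downwards from `node`. A symbol selects children with that
# tag; :* skips zero or more levels, like // in XPath.
def walk(node, steps)
  return [node] if steps.empty?
  step, *rest = steps
  if step == :*
    # either skip zero levels here, or go one level down and keep the :*
    walk(node, rest) + node.children.flat_map { |c| walk(c, steps) }
  else
    node.children.select { |c| c.name == step }.flat_map { |c| walk(c, rest) }
  end
end

# The same element can be reached along two routes when :* steps nest,
# so drop repeats by identity (Struct#== compares by value, not identity)
def path(node, *steps)
  walk(node, steps).uniq(&:object_id)
end

link = El.new(:a, [])
doc = El.new(:body, [
  El.new(:p, [
    El.new(:a, []),   # a link directly in the paragraph, not in a list
    El.new(:div, [El.new(:ul, [El.new(:li, [El.new(:b, [link])])])])
  ])
])

p path(doc, :p, :*, :ul, :li, :*, :a).map(&:name)
```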

node =~ /regular expression/ - just a small cool thing: node =~ :foo matches if node has tag foo, node =~ /regular expression/ matches if the text inside it (with markup stripped) matches, and so on. It's not that cool on its own, but combined with multielement paths it could be something extremely powerful.
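A minimal sketch of that kind of `=~`, again on a hypothetical element class rather than magic/xml's own: the operator just dispatches on the class of its argument, so a Symbol is compared with the tag name and a Regexp is run against the markup-stripped text. (This sketch returns `true`/`false`, unlike `String#=~`, which returns a match position.)

```ruby
# Hypothetical minimal element class, only here to illustrate node =~ pattern
class TagNode
  attr_reader :name, :contents

  def initialize(name, *contents)
    @name, @contents = name, contents
  end

  # Text with all markup stripped
  def text
    contents.map { |c| c.is_a?(TagNode) ? c.text : c.to_s }.join
  end

  # node =~ :foo  -> does the tag match?
  # node =~ /re/  -> does the stripped text match?
  def =~(pattern)
    case pattern
    when Symbol then name == pattern
    when Regexp then pattern.match?(text)
    else false
    end
  end
end

para = TagNode.new(:p, "Hello, ", TagNode.new(:b, "world"), "!")
puts(para =~ :p)              # true
puts(para =~ /Hello, world/)  # true: the <b> markup is ignored
puts(para =~ :div)            # false
```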

tree fragments - ever wanted to extract "part of BODY between second H1 and the first TABLE" ? node.range(start, end) and node.subsequence(start, end) can do the right thing for you (either including parents or not). The programs will become much easier to understand than iterating over individual nodes and checking whether they're in the right range or not.

So basically you now have 57 realistic examples of using magic/xml for XML parsing, enjoy :-)

Amethyst

This is some old stuff, but I haven't blogged about it before, so here goes. Amethyst is basically Perl with Ruby syntax, and you can get it here.

What it does is parse Amethyst source (with Ruby-like syntax) using Parse::RecDescent, generate Perl code for the whole program at once (this is a very fragile part), and eval it. Now Ruby and Perl have totally different semantics, so Amethyst hacks around both a lot - for example it can tell whether a Perl object "is" a string or a number, so the + operator acts the expected way on both, even though Perl pretends not to know that.

Some examples. Iterators work:


```ruby
arr = [1,2,3]
arr.each{|element| print(element,"\n")}
```

```perl
($arr = [1,2,3]);
call(
    method($arr,'each'),
    sub{
        my ($element)=@_;
        {call(function('print'), undef, $element, "\n")}
    },
    $arr
);
```

```ruby
xs = "15"
xi = xs.to_i
yi = 7
ys = yi.to_s
zi = xi + yi
zs = xs + ys
print(zi, "\n") # 22
print(zs, "\n") # 157
```

Saturday, August 05, 2006

W3C's XML Query Use Cases ported to magic/xml

Photo from flickr, by annia316, CC-BY.


```xquery
<bib>
  {
    for $b in doc("http://bstore1.example.com/bib.xml")/bib/book
    where $b/publisher = "Addison-Wesley" and $b/@year > 1991
    return
      <book year="{ $b/@year }">
        { $b/title }
      </book>
  }
</bib>
```

```ruby
XML.bib! {
  XML.from_file('bib.xml').children(:book) {|b|
    if b.child(:publisher).text == "Addison-Wesley" and b[:year].to_i > 1991
      book!({:year => b[:year]}, b.children(:title))
    end
  }
}
```

```xquery
declare function local:toc($book-or-section as element()) as element()*
{
  for $section in $book-or-section/section
  return
    <section>
      { $section/@* , $section/title , local:toc($section) }
    </section>
};

<toc>
  {
    for $s in doc("book.xml")/book return local:toc($s)
  }
</toc>
```

```ruby
def local_toc(node)
  node.children(:section).map{|c|
    XML.section(c.attrs, c.child(:title), local_toc(c))
  }
end

XML.toc! {
  add! local_toc(XML.from_file('book.xml'))
}
```

```xquery
for $s in doc("report1.xml")//section[section.title = "Procedure"]
return ($s//incision)[2]/instrument
```

```ruby
XML.from_file('report1.xml').descendants(:section) {|s|
  next unless s.child(:"section.title").text == "Procedure"
  print s.descendants(:incision)[1].child(:instrument)
}
```

- I don't think any other XML processing library gets results even close to that. So far only special-purpose XML processing languages like XQuery or CDuce were able to be that expressive.

- 10% more characters is very little in exchange for the full power of Ruby. And you don't have to learn a new language - working with magic/xml is almost like working with plain Ruby.

- The benchmarks come from the XQuery guys and show what XQuery is good at, so on a more unbiased selection magic/xml would probably do better than that. For example magic/xml assumes real XML attributes (<a href="http://www.google.com/">Google</a>) are the common case, not dummy tags with text (<a><href>http://www.google.com/</href><content>Google</content></a>), while XQuery assumes it the other way around. Then maybe magic/xml should get a few convenience methods for the other case.

- If special XML processing languages aren't significantly more expressive, do we even need them ? Performance comes from good profilers, but expressiveness cannot be hacked onto the system afterwards. So even if magic/xml is slow sometimes, it doesn't really matter.

XML stream processing with magic/xml

- Read them into memory as trees, and have all the cool methods. This works only with rather small XML files, especially since XML data takes more space in memory than on the disk.

- Process them as streams of events. This is usually very inconvenient.
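A middle ground between these two is "twig" processing: read the file as a stream, but build a small tree for each interesting element and hand it over once its end tag arrives, so only one such subtree is in memory at a time. A minimal sketch of the idea, assuming the XML has already been turned into a hypothetical list of `[:start, tag]`, `[:text, string]` and `[:end, tag]` events (a real program would get these from a streaming parser); `Twig`, `each_twig` and `text_of` are made-up names:

```ruby
# Hypothetical tree node built only for the elements we care about
Twig = Struct.new(:name, :children)

def text_of(node)
  node.children.map { |c| c.is_a?(Twig) ? text_of(c) : c }.join
end

# Yield a complete Twig for every element named `wanted`, as soon as its
# end tag has been seen. Events outside such elements are just skipped.
def each_twig(events, wanted)
  stack = []   # elements opened inside the current twig
  events.each do |type, value|
    case type
    when :start
      next if stack.empty? && value != wanted
      node = Twig.new(value, [])
      stack.last.children << node unless stack.empty?
      stack.push(node)
    when :text
      stack.last.children << value unless stack.empty?
    when :end
      next if stack.empty?
      node = stack.pop
      yield node if stack.empty?
    end
  end
end

events = [
  [:start, :mediawiki],
  [:start, :page], [:start, :title], [:text, "Pluto"], [:end, :title],
  [:start, :id], [:text, "42"], [:end, :id], [:end, :page],
  [:end, :mediawiki]
]

each_twig(events, :page) do |page|
  title = page.children.find { |c| c.is_a?(Twig) && c.name == :title }
  id    = page.children.find { |c| c.is_a?(Twig) && c.name == :id }
  print "#{text_of(id)}: #{text_of(title)}\n"
end
```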

```ruby
XML.parse_as_twigs(STDIN) {|node|
  next unless node.name == :page
  node.complete!
  t = node.children(:title)[0].contents
  i = node.children(:id)[0].contents
  print "#{i}: #{t}\n"
}
```

Friday, August 04, 2006

magic/xml really works

```ruby
require 'magic_xml'

doc = XML.from_url "http://t-a-w.blogspot.com/atom.xml"
doc.children(:entry).children(:link) {|c|
  print "#{c[:title]}\n#{c[:href]}\n\n" if c[:rel] == "alternate"
}
```

```ruby
require 'magic_xml'

deli_passwd = File.read("/home/taw/.delipasswd").chomp
url = "http://taw:#{deli_passwd}@del.icio.us/api/posts/recent?tag=taw+blog+magicxml"
XML.from_url(url).children(:post).reverse.each_with_index {|p,i|
  print XML.li("#{i+1}. ", XML.a({:href => p[:href]}, p[:description]))
}
```

XML is huge and ugly

Photo from Commons by Over Fresh, public domain.


```xml
<foo color="blue"><p>Some text &amp; and a bit more</p><br/>
```

- DTDs. DTDs do not follow the XML syntax, and according to the standard, they can be dumped straight into any XML document and the program is supposed to handle all that. And they do more than just validation ! They can set attribute default values, define text replacements and do a lot of other useless things. This is the worst thing about XML. Of course nobody actually dumps such things into documents; the most people do is a single (and ugly anyway) DOCTYPE declaration, as if we couldn't use MIME types for that.

- Non-standard entities. What does &foo; mean ? Well, it can mean anything. And it really sucks, because the program doesn't want to deal with escaping issues. So the program wants "AT&T", not "AT&amp;T". And what is the parser supposed to return when it gets "&foo; &amp; &bar;" ?

- CDATA - Yeah, let's provide a second and completely redundant way of escaping characters to make everyone's life harder.

- XML declarations. These <?xml ... ?> things that can specify version and encoding. As if the standard couldn't simply say "XML documents are encoded in UTF-8".

- Processing instructions. So now every program is supposed to somehow deal with <?mspaint ... ?> randomly splattered through the document. They don't even have to follow the tree structure, so where the heck is the parser supposed to attach them in the parse tree ?
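The entity complaint is easy to make concrete. Without reading a DTD, a parser can only decode XML's five predefined entities (`&amp;`, `&lt;`, `&gt;`, `&quot;`, `&apos;`) and numeric character references; something like `&foo;` simply has no meaning it can know. A minimal sketch of such a decoder in plain Ruby (the helper name `decode_xml_text` is made up, and real parsers handle more cases, e.g. non-ASCII entity names):

```ruby
# The only entities XML defines without a DTD
PREDEFINED_ENTITIES = {
  "amp" => "&", "lt" => "<", "gt" => ">", "quot" => "\"", "apos" => "'"
}

# Decode entity and character references in a piece of XML text.
# Unknown entities such as &foo; cannot be resolved without the DTD,
# so this sketch raises instead of guessing.
def decode_xml_text(s)
  s.gsub(/&(#x\h+|#\d+|[A-Za-z_][\w.-]*);/) do
    ref = $1
    if ref.start_with?("#x")
      [ref[2..-1].to_i(16)].pack("U")   # hex character reference
    elsif ref.start_with?("#")
      [ref[1..-1].to_i].pack("U")       # decimal character reference
    elsif PREDEFINED_ENTITIES.key?(ref)
      PREDEFINED_ENTITIES[ref]
    else
      raise ArgumentError, "undefined entity &#{ref};"
    end
  end
end

puts decode_xml_text("AT&amp;T")      # AT&T
puts decode_xml_text("&#65;&#x42;")   # AB
begin
  decode_xml_text("&foo; &amp; &bar;")
rescue ArgumentError => e
  puts e.message                      # undefined entity &foo;
end
```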

```ruby
node = XML.foo { bar!("Hello"); bar!({:color => "blue"}, "world") }
```

```xml
<foo><bar>Hello</bar><bar color="blue">world</bar></foo>
```

Enjoy :-)

Tuesday, August 01, 2006

From make to rake

- It is almost standard but not quite - some systems like Solaris use some broken non-GNU make (you need to use gmake there, bah). And without GNU extensions it's really hard to do many useful things with it.

- Complex Makefiles are very fragile, for example changing order of entries has highly unpredictable effects.

- It's really hard to do any non-trivial processing with make. What one really does is write scripts that generate Makefiles, and then the Makefiles call yet more scripts. Having everything in a single Rakefile is much nicer.

- With Rakefile I can program in Ruby. Perl or Python would also work great with this kind of programs. Shell definitely does not.

- Embedding Perl/Ruby one-liners in a Makefile really sucks. I need to escape extra characters to protect them against make, then against the shell. In the end I simply move everything to a different file.
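Rake also keeps the part of make that is worth keeping: `file` tasks only rebuild a target when it is missing or older than its prerequisites. A self-contained sketch of that behaviour (the temporary `Grammar.g`/`Grammar.rb` files are made-up stand-ins for a grammar and the parser generated from it, and the rule writes a placeholder instead of running ANTLR):

```ruby
require "rake"
require "tmpdir"
include Rake::DSL

# Build a hypothetical generated file three times and report how many times
# the generator step had run after each build
def rebuild_counts
  Dir.mktmpdir do |dir|
    grammar = File.join(dir, "Grammar.g")   # stand-in for a grammar source
    parser  = File.join(dir, "Grammar.rb")  # stand-in for the generated parser
    File.write(grammar, "grammar Demo;")

    runs = 0
    # Only rebuild parser when it is missing or older than grammar
    file parser => grammar do
      runs += 1                             # a real rule would run ANTLR here
      File.write(parser, "# generated from #{File.basename(grammar)}")
    end

    counts = []
    Rake::Task[parser].invoke               # parser missing: rule runs
    counts << runs
    Rake::Task[parser].reenable
    Rake::Task[parser].invoke               # parser up to date: rule skipped
    counts << runs
    later = Time.now + 60
    File.utime(later, later, grammar)       # pretend the grammar was edited
    Rake::Task[parser].reenable
    Rake::Task[parser].invoke               # grammar now newer: rule runs again
    counts << runs
    counts
  end
end

p rebuild_counts
```

Inside a real Rakefile the `require "rake"` and `include Rake::DSL` lines are unnecessary, since rake loads the DSL itself.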

```ruby
# Build the package using ANTLR
task :default do
  system "java -classpath lib/antlr-2.7.5.jar:lib/antlr-3.0ea7.jar:lib/stringtemplate-2.3b4.jar org.antlr.Tool RLispGrammar.g"
end

# Build first if packaging is requested
task :package => :default do
  date_string = Time.new.gmtime.strftime("%Y-%m-%d-%H-%M")
  files = %w[COPYING README STYLE
             antlr.rb RLispGrammar.g
             RLispGrammarLexer.rb RLispGrammar.rb
             rlisp.rb Rakefile
             rlisp_stdlib.rb stdlib.rl] +
          Dir.glob("examples/*.rl") +
          Dir.glob("examples/*.rb") +
          Dir.glob("lib/*.jar")

  # Prepend rlisp/ and temporarily chdir out
  # That's the only way to work with tar
  files = files.map{|f| "rlisp/#{f}"}
  Dir.chdir("..") {
    # Make a tarball
    system "tar", "-z", "-c", "-f", "rlisp/rlisp-#{date_string}.tar.gz", *files
    # and a zipball
    system "zip", "rlisp/rlisp-#{date_string}.zip", *files
  }
end

# Cleaning up
task :clean do
  generated_files = %w[
    RLispGrammarLexer.tokens
    RLispGrammarLexer.rb
    RLispGrammar.rb
    RLispGrammar.tokens
    RLispGrammar.lexer.g]
  # rm_f (FileUtils, available inside Rakefiles) skips files that are
  # already gone instead of raising like File.delete
  rm_f generated_files
end
```

Ruby book sales pass Perl

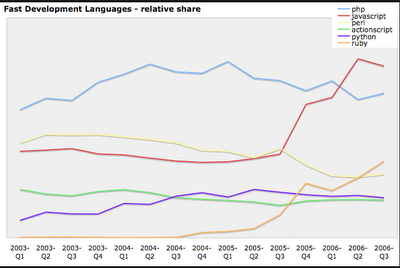

Chart from O'Reilly

So basically Ruby book sales passed Perl, Python is far behind, and JavaScript books are at the top, but they are mostly about AJAX, not actually about programming in JavaScript. It seems that Ruby has huge momentum right now, as book sales indicate what people are learning now, and therefore the technical trends for the next few years. So are we already getting there ?

Posted by taw at 15:23

magic/xml

Photo from Commons by Fabian Köster, GFDL.


```ruby
a = XML.new(:foo, {:a => "1"}, "Hello")
b = a.dup{ @name = :bar }
c = a.dup{ self[:a] = "2" }
d = a.dup{ self << ", world!" }
```

```ruby
include XHTML
a = A.new({:href => "http://www.google.com/"}, "Google")
a[:href] = "http://earth.google.com/"
a << " Earth"
```
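One plausible way to get that `dup { ... }` behaviour, sketched on a hypothetical `Element` class (not magic/xml's actual code): copy the node, give the copy its own attribute hash and contents array so the original stays untouched, then `instance_eval` the block on the copy so `@name`, `self[...]` and `self << ...` all refer to it.

```ruby
# Hypothetical stand-in for an XML element class (not magic/xml's code)
class Element
  attr_reader :name, :attrs, :contents

  def initialize(name, attrs = {}, *contents)
    @name, @attrs, @contents = name, attrs, contents
  end

  def [](key)
    @attrs[key]
  end

  def []=(key, value)
    @attrs[key] = value
  end

  # Append a child or a piece of text
  def <<(item)
    @contents << item
    self
  end

  # Ruby calls this on the fresh copy made by dup; give the copy its own
  # attribute hash and contents array so changes don't leak into the original
  def initialize_copy(source)
    super
    @attrs = source.attrs.dup
    @contents = source.contents.dup
  end

  # dup, then run the optional block with self set to the copy
  def dup(&block)
    copy = super()
    copy.instance_eval(&block) if block
    copy
  end
end

a = Element.new(:foo, {:a => "1"}, "Hello")
b = a.dup { @name = :bar }
c = a.dup { self[:a] = "2" }
d = a.dup { self << ", world!" }
p [a.name, a[:a], a.contents]   # the original is unchanged
p [b.name, c[:a], d.contents]
```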